Use Case: Build Your Custom Article Generator

Build an article writer using PromptChainer as the back-end and an open-source front-end

When I first got into content marketing and SEO around 2011, it was the era of “Private Blog Networks” (PBNs). The biggest one back then was “BMR” (Build My Rank). Several others ran for some months until Google cracked down on them.

Back then, much like today, many people used freelance content writers, while those wearing gray or black hats used content spinners, which somehow actually worked over 10 years ago.

Today it’s a very different story. Many are still skeptical about AI content (IDK why), but I’m sure most will agree that even if AI-generated content is not 100% “publish-ready” on autopilot, with the right tweaks and a strong editor to fix mistakes, fact-check, and add a personal touch, the results can be mind-blowing, high-level content.

If that’s correct, then adding custom, accurate, case-specific settings and configurations on top of this layer [an amazing LLM (for example, GPT-4) + an experienced editor (the cornerstone of every content team)] can lead to amazing content generation at scale.

I’m happy to say that all of this is possible right now (and currently free). We even built it for you: you can fork the GitHub repository at https://github.com/PromptChainer/showcase-article-gen and connect it to PromptChainer’s easily configured “Content Generator Template” back-end. Here is a live example:

http://contentgen.promptchainer.io

Let’s go over exactly how we built it, so that you can do the same, or even better.

First, we decided which variables to set up for our flow. These variables act as guiding principles for the LLM (Large Language Model, in this case ChatGPT) and help ensure the content it generates aligns with our needs.

The first variable we set was '{subject}', which guided the LLM toward the central theme the article should be written around. We then set up '{focus_kw}' to tell the LLM which keywords and phrases to include in the article for SEO purposes, and '{target_audience}' to help it tailor the language and tone to the specific readers we were aiming to reach. Lastly, we used '{per_notes}' for personal notes, reminders, and specific guidelines to aid the content creation process.
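To make the role of these variables concrete, here is a minimal sketch of filling `{name}` placeholders in a prompt template. The template wording and the `fillTemplate` helper are hypothetical; PromptChainer presumably handles this substitution itself inside the flow, and this only mimics the idea.

```javascript
// Hypothetical prompt template: the wording is illustrative, not the one used in the actual flow.
const OUTLINE_PROMPT =
  "Write a general description of {subject} for {target_audience}. " +
  'Naturally include the keyword "{focus_kw}". Follow these notes: {per_notes}';

// Replace every {name} placeholder with vars[name]. Unknown placeholders are left
// untouched, so a missing variable is easy to spot in the rendered prompt.
function fillTemplate(template, vars) {
  return template.replace(/\{(\w+)\}/g, (placeholder, name) =>
    Object.prototype.hasOwnProperty.call(vars, name) ? String(vars[name]) : placeholder
  );
}

const prompt = fillTemplate(OUTLINE_PROMPT, {
  subject: "private blog networks",
  focus_kw: "PBN",
  target_audience: "SEO beginners",
  per_notes: "Keep it neutral and avoid jargon.",
});
console.log(prompt);
```

Leaving unknown placeholders in place (instead of substituting an empty string) is a deliberate choice: a forgotten variable shows up in the prompt rather than silently disappearing.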

Our first action node generated a general description of the subject: ChatGPT used the '{subject}' variable as a guide and produced a broad overview of the topic, setting the stage for the rest of the article.

Next, in our second action node, the LLM broke the general description down into distinct subtopics. This let us explore different facets of the subject in detail and ensured comprehensive coverage of the topic.

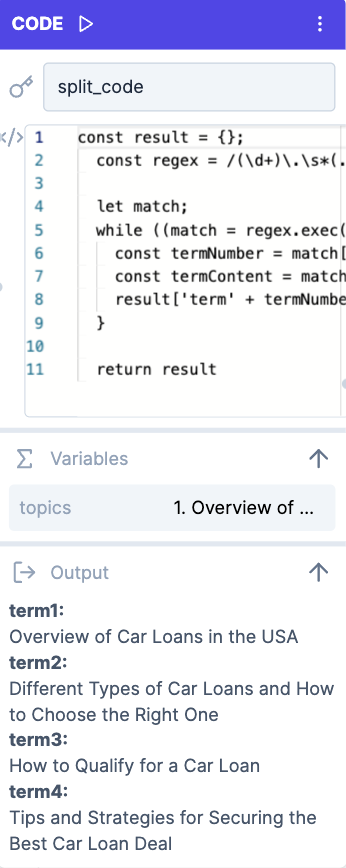
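The two action nodes above can be sketched as a simple chain, assuming a hypothetical async `complete(prompt)` function that wraps an LLM call. Here `fakeComplete` returns canned text so the sketch runs offline, and the prompt wording is illustrative rather than the prompts used in the real flow. The key point is that the first node's output becomes part of the second node's prompt, and the second node is asked for a numbered list so a code node can parse it afterwards.

```javascript
// Hypothetical stand-in for an LLM call. It records each prompt and returns
// canned text so the sketch runs offline; a real flow would call a model here.
const seenPrompts = [];
async function fakeComplete(prompt) {
  seenPrompts.push(prompt);
  if (prompt.startsWith("Describe")) {
    return "Private blog networks are groups of sites built to pass link authority.";
  }
  return "1. What a PBN is\n2. Why Google penalizes PBNs\n3. Safer link-building options";
}

// Node 1 produces a broad description of the subject.
// Node 2 receives that description and returns a numbered list of subtopics,
// one per line, which is the shape a parsing code node can split into variables.
async function runOutlineNodes(subject, complete) {
  const description = await complete(`Describe ${subject} in one paragraph.`);
  const topics = await complete(
    "Break the following description into 3 distinct subtopics. " +
      "Reply only with a numbered list, one subtopic per line.\n\n" +
      description
  );
  return { description, topics };
}

runOutlineNodes("private blog networks", fakeComplete).then(({ topics }) => {
  console.log(topics);
});
```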

From there, we passed the output through a code node that split the numbered list of subtopics into smaller, manageable variables (term1, term2, and so on). These variables were then used throughout the rest of the flow to maintain consistency and coherence.

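```javascript
// `topics` is the code node's input (assumed to be the numbered subtopic list
// from the previous action node), e.g. "1. First subtopic\n2. Second subtopic".
const result = {};

// Capture "<number>. <text>" up to the next "<number>." or the end of the input.
// `.` does not match newlines, so each item's text must fit on a single line.
const regex = /(\d+)\.\s*(.*?)(?=\s*(?:\d+\.|$))/g;

let match;
while ((match = regex.exec(topics)) !== null) {
  const termNumber = match[1];  // the item number, e.g. "1"
  const termContent = match[2]; // the subtopic text
  // Store each subtopic as its own variable: term1, term2, ...
  result['term' + termNumber] = termContent.trim();
}

// Returning the object makes term1, term2, ... available to later nodes in the flow.
return result;
```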
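One caveat worth knowing: the pattern above treats any number followed by a period as the start of a new item, so a subtopic like "Top 10. tips" would be split into a term2 of "Top" and a term10 of "tips". If you prompt the LLM to put exactly one subtopic per line, a line-anchored pattern avoids that. The `parseNumberedLines` helper below is an alternative sketch under that one-item-per-line assumption, not the parser used in the original flow.

```javascript
// Alternative parser (an assumption, not the original flow's pattern):
// only a number at the start of a line begins a new item, so "Top 10. tips" stays intact.
function parseNumberedLines(topics) {
  const result = {};
  // ^ and $ match at line boundaries because of the "m" flag; [ \t] avoids crossing lines.
  const lineItem = /^[ \t]*(\d+)\.[ \t]*(.+)$/gm;
  for (const [, termNumber, termContent] of topics.matchAll(lineItem)) {
    result["term" + termNumber] = termContent.trim();
  }
  return result;
}

const parsed = parseNumberedLines("1. Intro to PBNs\n2. Top 10. tips\n3. Wrap-up");
console.log(parsed);
// parsed → term1: "Intro to PBNs", term2: "Top 10. tips", term3: "Wrap-up"
```

The trade-off is the reverse of the original: inline lists such as "1. A 2. B" on a single line are no longer split, so pick the pattern that matches the format you ask the LLM for.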

We then made several calls to the LLM through various action nodes to generate the individual paragraphs, titles, FAQs, and the conclusion. The flow was guided by the initial variables and by the subtopic variables created in the code node, which kept each part of the content in sync with the overall theme and context.
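A rough sketch of this stage, assuming a hypothetical async `complete(prompt)` LLM wrapper and a `terms` object shaped like the code node's output (`{ term1: "...", term2: "..." }`). The prompt wording, the shared `context` string, and the returned object shape are illustrative, not the prompts used in the actual PromptChainer flow.

```javascript
// Hypothetical section-writing step. `complete` is an assumed async function
// that sends a prompt to an LLM and resolves to the generated text.
async function writeArticle(vars, terms, complete) {
  // The shared context repeats the initial variables in every prompt,
  // which keeps each generated part on the same theme and audience.
  const context =
    `Subject: ${vars.subject}. Audience: ${vars.target_audience}. ` +
    `Focus keyword: ${vars.focus_kw}.`;
  // Object.values keeps insertion order for these non-numeric string keys,
  // i.e. the order in which the code node found the terms.
  const subtopics = Object.values(terms);

  const title = await complete(`${context}\nWrite an SEO title for this article.`);
  const sections = [];
  for (const subtopic of subtopics) {
    const body = await complete(`${context}\nWrite a section about: ${subtopic}`);
    sections.push({ heading: subtopic, body });
  }
  const outline = subtopics.join("; ");
  const faq = await complete(`${context}\nWrite 3 FAQs covering: ${outline}`);
  const conclusion = await complete(`${context}\nWrite a conclusion covering: ${outline}`);
  return { title, sections, faq, conclusion };
}

// Offline stub: echoes the last prompt line instead of calling a model.
const echo = async (prompt) => `[draft for: ${prompt.split("\n").pop()}]`;

writeArticle(
  { subject: "PBNs", target_audience: "SEO beginners", focus_kw: "PBN" },
  { term1: "What a PBN is", term2: "Why Google penalizes PBNs" },
  echo
).then((article) => console.log(article.sections.map((s) => s.heading)));
```

The section calls run one after another for simplicity; since they do not depend on each other, they could also be issued together with `Promise.all` if your model's rate limits allow it.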

Finally, we set up an output node to export the final article. This let us store the generated content in a database or make it available for future API calls, integrating the flow with our existing systems and processes.
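As an illustration of what an output step might hand to a database or an API, here is a hypothetical `toArticleRecord` helper. It assumes the generated parts arrive as an object with `title`, `sections` (heading/body pairs), `faq`, and `conclusion` fields; the record's field names and the Markdown layout are assumptions, not PromptChainer's actual output format.

```javascript
// Hypothetical output step: flatten the generated parts into one Markdown string
// and wrap it in a JSON-serializable record. Field names are assumptions,
// not PromptChainer's actual output schema.
function toArticleRecord(vars, article) {
  const markdown = [
    `# ${article.title}`,
    ...article.sections.map((s) => `## ${s.heading}\n\n${s.body}`),
    `## FAQ\n\n${article.faq}`,
    `## Conclusion\n\n${article.conclusion}`,
  ].join("\n\n");
  return {
    subject: vars.subject,
    focusKeyword: vars.focus_kw,
    createdAt: new Date().toISOString(),
    markdown,
  };
}

const record = toArticleRecord(
  { subject: "PBNs", focus_kw: "PBN" },
  {
    title: "What Are PBNs?",
    sections: [{ heading: "Definition", body: "Section text." }],
    faq: "FAQ text.",
    conclusion: "Summary text.",
  }
);
// The JSON string is what you would store in a database or return from an API.
console.log(JSON.stringify(record, null, 2));
```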

All in all, the flow we built is a powerful combination of AI and human intelligence. It leverages the capabilities of GPT-4 while keeping the human touch alive through the editor's input. Yet this is just the beginning: the real power of PromptChainer lies in its versatility and adaptability, and the possibilities go far beyond article writing.

Imagine chaining together different AI models, each with its unique strengths, to create a powerhouse of AI capabilities. From content generation to customer service, data analysis to design, the sky is the limit when it comes to what you can achieve with PromptChainer. And the beauty of it all? It's all accessible through a user-friendly interface that requires only a basic understanding to operate.