Use Case: Build a Unique Email Reply Assistant

Build a custom-tailored reply wizard using PromptChainer as the back-end and an open-source front-end

One of the best customer service experiences I have had as a customer here in Israel is with Wolt. The way their support staff communicate is, IMO, amazing. I’m guessing that comes from a very refined training program for their people, or maybe from easy-to-use templates, guides, and ready-made answers for each scenario. The point is that they have somehow “standardized” their answers to any user complaint or request. Today, with AI, this may be easier to implement than ever, even for smaller teams.

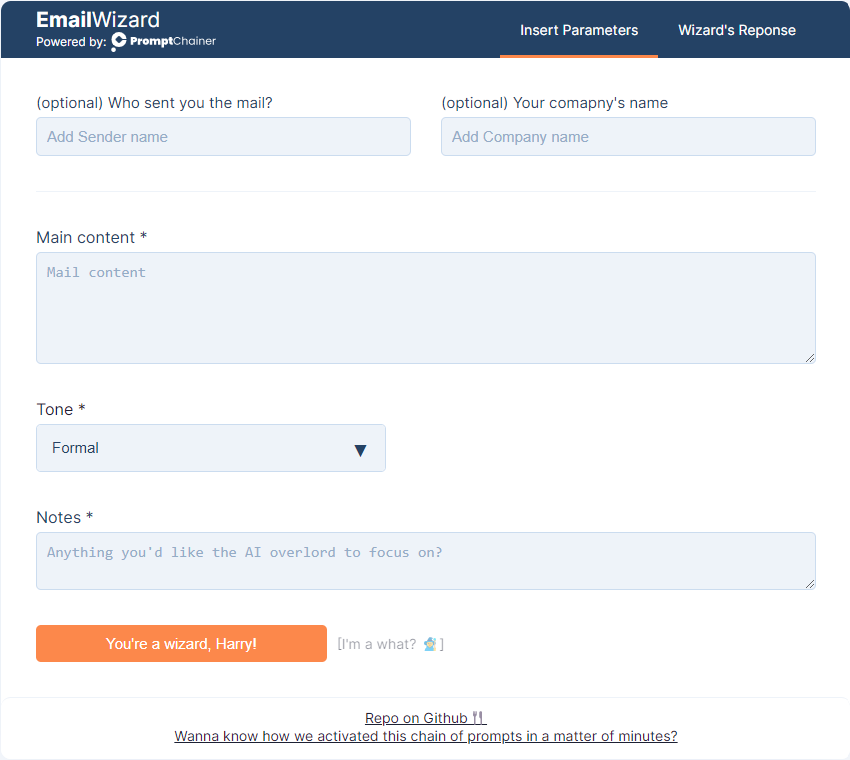

Introducing: emailwizard.promptchainer.io

In this super small and “easy” to use EmailWizard 🧙🏼♂️ use case, we have built (and, once again, open sourced) the front-end so you can fork it and deploy your own version of this cool and useful Email Reply Wizard. Standardize your team's replies to users and new clients; the sky's the limit. With 💬🔗 PromptChainer as the back-end, you can chain any custom prompts and add any inputs to the wizard, tailoring it to your own style of language and automatically adding relevant business data to each reply it generates.

In order to build your own version you will need to:

1st, sign up to PromptChainer, open a new flow, and load the “MailReply” template.

2nd, fork the front-end here:

https://github.com/PromptChainer/showcase-email-wizard

Then add your PromptChainer API key and the “MailReply” flow ID, and you are done!

Feel free to change the flow to suit your specific needs (for any questions, get in touch with us on our live chat or at support@promptchainer.io). To help you do that, here is a breakdown of the flow that runs in the back-end:

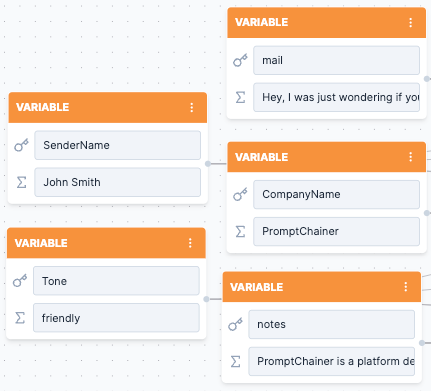

Firstly, we thought about the variables necessary for our flow. These variables act like guiding posts for the Large Language Model (LLM/ChatGPT), ensuring the responses it forms are in line with our expectations.

The first variable we introduced was '{mail}'. This allowed us to directly feed the LLM with the content of the email that required a response. Following that, we incorporated '{sendername}' to lend a touch of personalization to the response. We also made use of '{companyname}' to make the response more relatable in a business context. And lastly, we included '{tone}' to guide the LLM on the appropriate tone to use in the response, ensuring it met professional standards.

We included the '{notes}' variable, as it serves a vital role in making the LLM's output more accurate and relevant. It's an opportunity for the user to include a short, distilled summary of what they want the AI to focus on in the reply. The notes don't need to be lengthy or complicated. Instead, they should be concise, clear and to the point, serving as guideposts for the AI to craft a well-rounded reply.

Think of '{notes}' as a way to streamline the process by bringing the essential elements into the spotlight. For example, if a user is having login issues, wondering about product functionality, and inquiring about pricing, the personal notes could be something like: "Address login issues - suggest checking connection and router reset. Talk about upcoming functionality updates next week. Inform that the product will transition from free to paid next month."
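To make the role of the five variables concrete, here is a minimal Python sketch of how they could be substituted into a single prompt. The template wording, the `build_prompt` helper, and the sample values (`Dana`, `Acme`) are assumptions made for illustration; the actual “MailReply” template in PromptChainer may be phrased and structured differently.

```python
from typing import Mapping

# Hypothetical prompt wording; the real "MailReply" template may differ.
REPLY_TEMPLATE = (
    "You are replying on behalf of {companyname}.\n"
    "Sign the reply as {sendername} and keep a {tone} tone.\n"
    "Points the reply must cover: {notes}\n"
    "Email to answer:\n{mail}"
)

# The five variables described in the text.
REQUIRED_VARIABLES = ("mail", "sendername", "companyname", "tone", "notes")


def build_prompt(variables: Mapping[str, str]) -> str:
    """Fill the template, refusing to run if a variable is missing or blank."""
    missing = [
        name for name in REQUIRED_VARIABLES
        if not variables.get(name, "").strip()
    ]
    if missing:
        raise ValueError(f"Missing flow variables: {', '.join(missing)}")
    return REPLY_TEMPLATE.format(**variables)


example_variables = {
    "mail": "Hi, I can't log in. Also, what features are coming, and is it free?",
    "sendername": "Dana",      # illustrative value
    "companyname": "Acme",     # illustrative value
    "tone": "friendly",
    "notes": (
        "Address login issues - suggest checking connection and router reset. "
        "Talk about upcoming functionality updates next week. "
        "Inform that the product will transition from free to paid next month."
    ),
}

prompt = build_prompt(example_variables)
```

Checking for blank variables before the prompt is built is a design choice of this sketch: an empty '{notes}' or '{tone}' would otherwise produce a prompt that silently lacks the guidance the variable is meant to provide.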

This approach reinforces the notion that while AI is highly proficient at understanding and generating complex language, the human touch is necessary to pinpoint the specifics and ensure the nuances are captured. By integrating these brief but meaningful directives, we can more effectively leverage the AI's capabilities while ensuring that the replies maintain their relevance and accuracy.

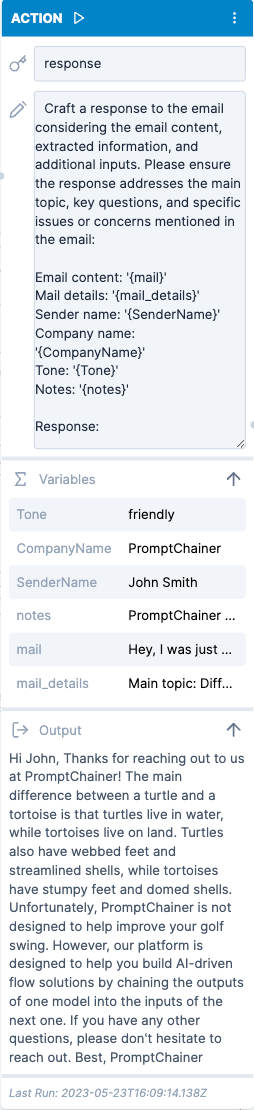

The initial action in our sequence was to extract the main points from the incoming email. Using the '{mail}' variable, the LLM was able to identify key elements and set the foundation for an appropriate response.

The subsequent step involved crafting an initial response to the email. At this point, the LLM used the '{sendername}', '{companyname}', '{tone}', and '{notes}' variables to draft a response that not only addressed the main points but also did so in a contextually relevant manner.
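The two steps above form a simple chain: the output of the extraction prompt becomes an input to the drafting prompt. The sketch below illustrates that idea in plain Python. It is not PromptChainer's API: `LLM` stands in for any prompt-to-text function, and the function names and prompt wording are hypothetical.

```python
from typing import Callable

# Any function that maps a prompt string to a completion string.
# In the real flow this role is played by the LLM nodes in PromptChainer.
LLM = Callable[[str], str]


def extract_main_points(llm: LLM, mail: str) -> str:
    """Step 1: ask the model to condense the incoming email."""
    return llm(f"List the main points of the following email:\n\n{mail}")


def draft_reply(
    llm: LLM,
    main_points: str,
    sendername: str,
    companyname: str,
    tone: str,
    notes: str,
) -> str:
    """Step 2: draft a reply from the extracted points and the other variables."""
    prompt = (
        f"Write a {tone} email reply on behalf of {companyname}, "
        f"signed by {sendername}.\n"
        f"Main points of the customer's email:\n{main_points}\n"
        f"Notes from the sender:\n{notes}"
    )
    return llm(prompt)


def reply_chain(
    llm: LLM,
    mail: str,
    sendername: str,
    companyname: str,
    tone: str,
    notes: str,
) -> str:
    """Run both steps, feeding the output of step 1 into step 2."""
    main_points = extract_main_points(llm, mail)
    return draft_reply(llm, main_points, sendername, companyname, tone, notes)
```

Passing the model in as a parameter keeps the chain easy to test with a stub function and easy to point at a different model later.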

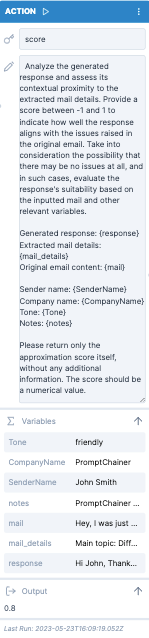

After the response is generated, we introduced an LLM-based evaluation step. This involves prompting the LLM with a dedicated task: assign a score to the generated response. The score ranges from -1 to 1 and reflects how well the response aligns with the content of the original email.

With this method, we let the LLM assess the coherence, relevance, and completeness of the generated response in relation to the content of the received email. This scoring allows us to objectively determine the quality of our LLM-generated replies.
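Because the evaluator is itself an LLM, its answer arrives as text and may include extra words around the number. A minimal sketch of turning that text into a usable score follows. The `parse_score` helper and its behavior (take the first number found, clamp it to the -1 to 1 range) are assumptions for illustration, not a description of how PromptChainer handles the score internally.

```python
import re

# Matches an optionally negative integer or decimal, e.g. "0.8", "-1", ".5".
_NUMBER = re.compile(r"-?(?:\d+\.?\d*|\.\d+)")


def parse_score(raw: str) -> float:
    """Extract the first number from the evaluator's reply and clamp it to [-1, 1]."""
    match = _NUMBER.search(raw)
    if match is None:
        raise ValueError(f"No numeric score found in: {raw!r}")
    value = float(match.group())
    # Clamp, in case the model answers outside the requested scale.
    return max(-1.0, min(1.0, value))
```

For example, `parse_score("Score: 0.8")` yields `0.8`, while an out-of-range answer such as `"7"` is clamped to `1.0`.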

Based on this score, we incorporated conditional nodes to determine the next course of action. If the score exceeded 0.5, the response was deemed satisfactory, and the flow was terminated. However, if the score was 0.5 or less, the flow proceeded to the next step, which involved enhancing the response.

The final step involved refining the response, applicable only when the initial score was low. The objective here was to augment the response such that it better addressed the requirements outlined in the original email.

If both conditional nodes lead to a negative outcome, implying that the LLM could not generate an adequately scoring reply even after a second attempt, we take an important final step. We use the LLM to generate insights about the failure.

Finally, we configured an output node to dispatch the final response, either the first draft or the refined version, depending on the scoring system's verdict. This allowed for easy integration of the responses into our existing email system.
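The control flow described above (draft, score, refine if needed, score again, then explain the failure if the score is still low) can be sketched as follows. This is an illustration under assumptions rather than the actual flow definition: the four callables are hypothetical stand-ins for the flow's LLM nodes, and returning the refined reply together with the failure insights is an assumption, since the text does not say which reply is sent in the failure case.

```python
from dataclasses import dataclass
from typing import Callable, Optional

THRESHOLD = 0.5  # from the text: a score above 0.5 is considered satisfactory


@dataclass
class FlowResult:
    reply: str
    score: float
    refined: bool
    failure_insights: Optional[str] = None


def run_reply_flow(
    draft: Callable[[], str],
    score: Callable[[str], float],
    refine: Callable[[str], str],
    explain_failure: Callable[[str], str],
) -> FlowResult:
    """Draft, score, refine once if needed, and explain a second failure."""
    first = draft()
    first_score = score(first)
    if first_score > THRESHOLD:
        # First conditional node: the draft is good enough, stop here.
        return FlowResult(first, first_score, refined=False)

    second = refine(first)
    second_score = score(second)
    if second_score > THRESHOLD:
        # Second conditional node: the refined version passes.
        return FlowResult(second, second_score, refined=True)

    # Both attempts scored 0.5 or less: ask for insights about the failure.
    # Assumption: the refined reply is still returned alongside the insights.
    return FlowResult(
        second,
        second_score,
        refined=True,
        failure_insights=explain_failure(second),
    )
```

Note that the comparison is strictly greater than 0.5, matching the text: a score of exactly 0.5 sends the reply to the refinement step.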

In summary, the flow we created is a fantastic blend of AI capabilities and human intervention. It leverages the computational power of the GPT-4 model while maintaining a human touch via the variables and scoring system. However, this is just the tip of the iceberg. The true potential of PromptChainer lies in its adaptability and flexibility. The sky's the limit with what you can do.